The Latency Challenge: Scaling Real-Time AI Voice Analysis

The Latency Challenge: Scaling Real-Time AI Voice Analysis

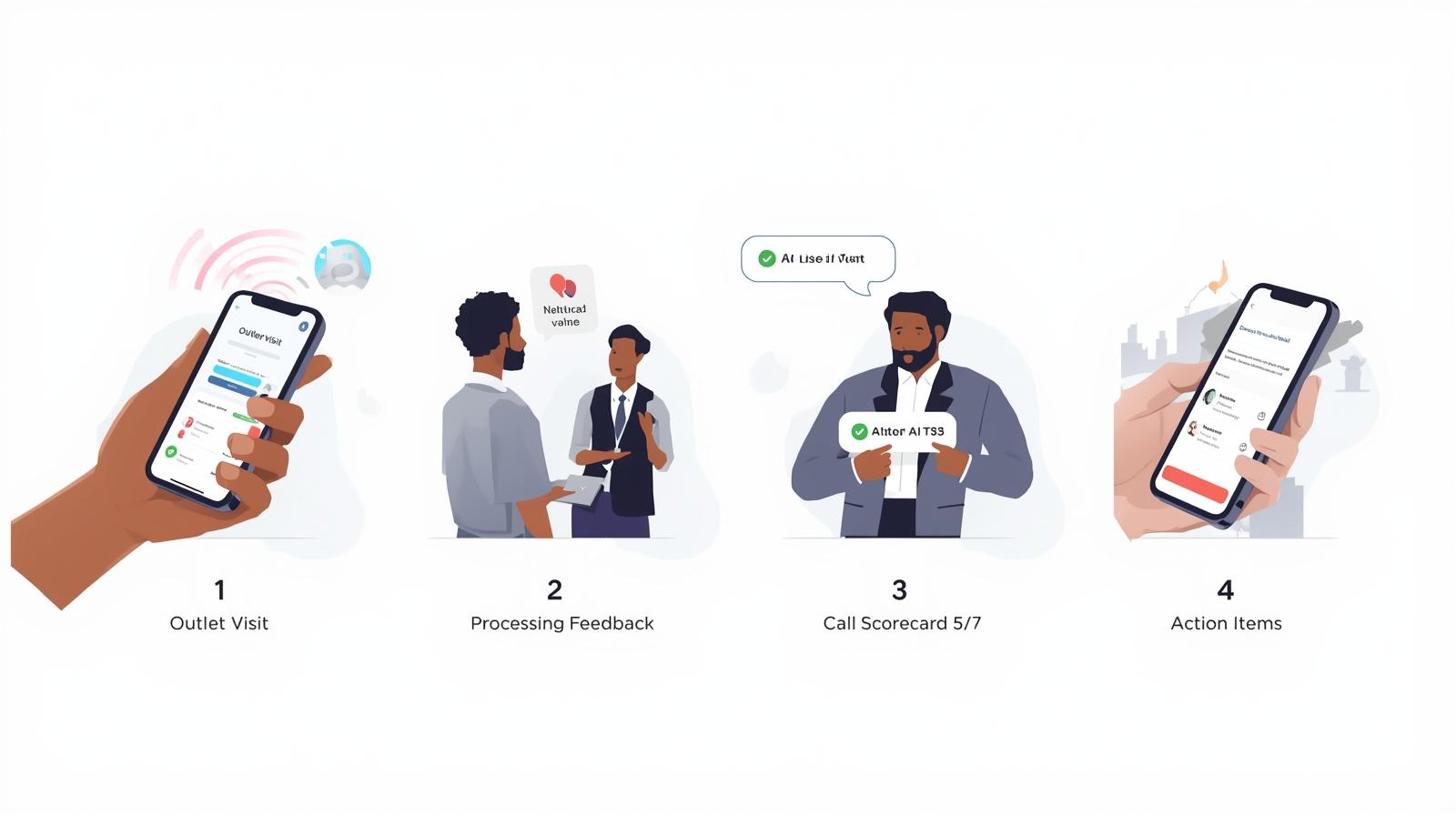

In the world of Field Sales, timing is everything. Over the last few weeks, I’ve been diving deep into a specific engineering challenge: How do you provide near-instant AI feedback on voice conversations in areas with unpredictable internet?

Working on scaling Bizom's voice-first AI pilot to 2,000+ field sales users has taught me that the "AI model" is only 20% of the puzzle. The other 80% is the audio pipeline.

1. Data Geometry: Why Less is More

In the office, you use high-quality audio. On the field, high-quality is a liability. A large audio file on a patchy network is a bottleneck that no amount of AI speed can fix.

The shift: We moved from "High Quality" to "High Efficiency." By optimizing the sample rate and switching to mono-channel streams, we reduced our data payload by over 60%. The AI didn't lose accuracy, but the user gained 10 seconds of their life back.

2. Adapting to the Environment (2G/3G/4G)

The most significant hurdle was the "connectivity gap." Salesmen move between high-speed 4G zones and concrete warehouses with barely a 2G signal.

We utilized a transport layer that features Adaptive Bitrate. Instead of the connection breaking when the signal dips, the codec "squeezes" the audio. It prioritizes the human voice over background noise, ensuring the AI receives a continuous stream of data regardless of the bars on the phone.

3. Architecture for Concurrency

When 10 or 100 users hit a server simultaneously, "Burstable" performance is a risk. We learned that for real-time audio, consistent compute power is mandatory.

- Memory over Disk: We designed the system to handle audio processing entirely in RAM. By avoiding "disk I/O" (writing files to a hard drive), we shaved off hundreds of milliseconds.

- Regionality: We placed our infrastructure in the same region as our users (Mumbai). In a low-latency game, the speed of light matters.

4. The "Gateway" Strategy

To keep the backend secure and performant, we used a reverse-proxy setup. This allowed us to:

- Handle SSL termination efficiently.

- Route traffic to specific internal high-speed ports.

- Protect the core AI logic from direct public exposure.

Final Thoughts

Building this pilot showed me that Engineering for the Field is different from Engineering for the Web. You have to respect the constraints of the network and the hardware. When you optimize the "plumbing" of the audio, the AI finally feels like magic.