Comparing Sarvam AI and OpenAI Whisper - A Deep Dive into Indian Language Transcription

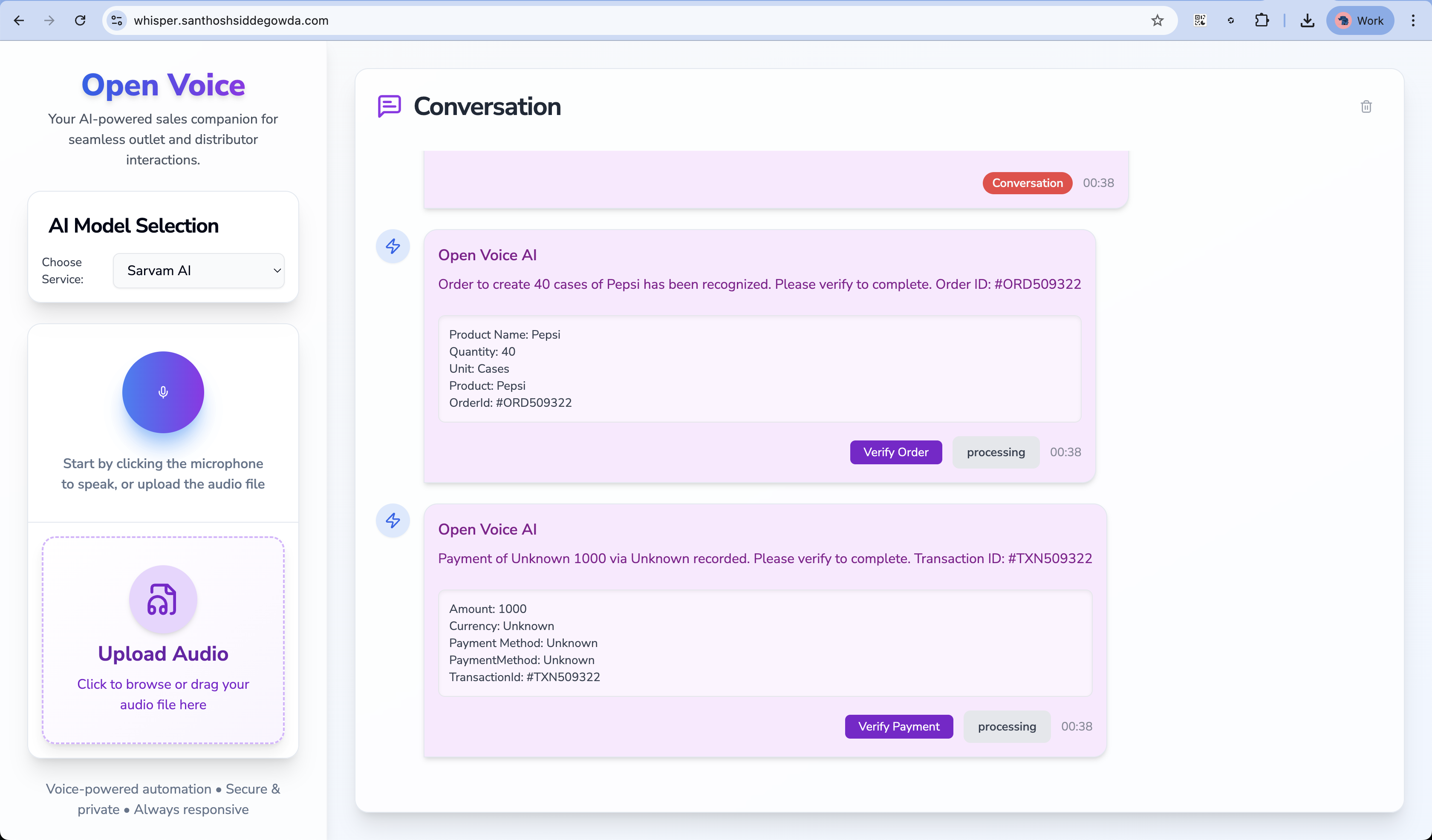

In this article, I'll share my hands-on experience using two speech-to-text transcription solutions: Sarvam AI and OpenAI Whisper. I've worked with both in real-world scenarios and will walk through their accuracy, integration process, and how well they perform under different conditions—especially for Indic language use cases.

Sarvam AI: My Experience

Sarvam AI is built with a strong focus on Indian languages and dialects, making it a great option for transcription tasks involving regional content, especially Kannada. I also experimented with Hindi, Hinglish, and Kanglish, and here's what I found.

🔧 Key Features & Usage

Sarvam AI provides pretrained ASR models specialized in Indian linguistic patterns, with a particular emphasis on Kannada. It's primarily accessed via API.

    # Example: Sarvam AI API integration
    import requests

    url = "https://api.sarvam.ai/v1/asr"
    headers = {
        "Authorization": "Bearer YOUR_SARVAM_API_KEY",
        "Content-Type": "application/json",
    }
    data = {
        "audio_url": "YOUR_AUDIO_FILE_URL",
        "language": "kn",  # Example: Kannada
    }

    # Send the transcription request and fail loudly on HTTP errors
    response = requests.post(url, headers=headers, json=data, timeout=60)
    response.raise_for_status()
    print(response.json())

✅ Performance and Observations

Sarvam AI performed impressively for Kannada, staying accurate even on noisy recordings. It stood out on regional accents, where it was more accurate than general-purpose models. Latency was fine for batch jobs, but real-time responsiveness needs improvement.
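For batch jobs like the ones mentioned above, a small helper can run many files in parallel and record how long each one takes. This is only a sketch: `transcribe_batch` and its `transcribe` argument are hypothetical names, and `transcribe` stands in for whatever function wraps your API call.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple


def transcribe_batch(
    audio_refs: List[str],
    transcribe: Callable[[str], str],
    max_workers: int = 4,
) -> Dict[str, Tuple[str, float]]:
    """Run `transcribe` over many audio references in parallel.

    Returns a dict mapping each reference to (transcript, seconds_taken).
    """

    def timed(ref: str) -> Tuple[str, float]:
        # Time a single call so slow files are easy to spot afterwards
        start = time.perf_counter()
        text = transcribe(ref)
        return text, time.perf_counter() - start

    # Threads suit this workload because each call mostly waits on the network
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(timed, audio_refs))
    return dict(zip(audio_refs, results))
```

The per-file timings give a rough feel for whether a service is fast enough for your use case before you attempt anything real-time.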

One thing I appreciated was its domain-specific handling of code-mixed language, which is quite common in everyday Indian speech, especially in Kannada.

I also tested Sarvam AI with Hindi, Hinglish, and Kanglish. The model handled Hindi well, but its performance with Hinglish and Kanglish was mixed. It struggled with rapid code-switching and slang, which are common in these hybrid languages. However, for more formal or slower-paced content, it still provided decent results.
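One rough way to put a number on "rapid code-switching" is to count how often the script changes between neighbouring words in a transcript. The sketch below assumes the transcript keeps Kannada or Hindi words in their native script and English words in Latin script; it tells you nothing about fully romanized Hinglish or Kanglish. The function names are my own, not part of either tool.

```python
def script_of(token: str) -> str:
    """Classify a token by the script of its first recognizable letter."""
    for ch in token:
        cp = ord(ch)
        if 0x0C80 <= cp <= 0x0CFF:  # Unicode Kannada block
            return "kannada"
        if 0x0900 <= cp <= 0x097F:  # Unicode Devanagari block
            return "devanagari"
        if ch.isascii() and ch.isalpha():
            return "latin"
    return "other"  # digits, punctuation, anything else


def switch_rate(text: str) -> float:
    """Fraction of adjacent word pairs where the script changes."""
    scripts = [s for s in map(script_of, text.split()) if s != "other"]
    if len(scripts) < 2:
        return 0.0
    switches = sum(1 for a, b in zip(scripts, scripts[1:]) if a != b)
    return switches / (len(scripts) - 1)


print(switch_rate("ನಾನು meeting ಗೆ late ಆಗಿ ಬಂದೆ"))  # 0.8
```

A higher rate means denser code-mixing, which is the kind of audio where I saw the mixed results.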

OpenAI Whisper: My Experience

Whisper by OpenAI is known for its broad multilingual capabilities and high transcription quality, especially in English and major global languages.

🔧 Key Features & Usage

You can use Whisper via OpenAI's API or run it locally using their open-source models.

    # Using Whisper via OpenAI API
    from openai import OpenAI

    client = OpenAI(api_key="YOUR_OPENAI_API_KEY")

    with open("/path/to/audio.mp3", "rb") as audio_file:
        transcript = client.audio.transcriptions.create(
            model="whisper-1",
            file=audio_file,
        )

    print(transcript.text)

For offline/local inference:

    # Run Whisper locally (requires ffmpeg to be installed)
    pip install -U openai-whisper
    whisper audio.mp3 --model base

Whisper comes in several model sizes (tiny, base, small, medium, large). Larger models are generally more accurate but slower and need more memory, so pick the largest one your hardware can handle.
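If you prefer Python over the CLI, the same package exposes `whisper.load_model` and `model.transcribe`. The sketch below picks a model size from the approximate VRAM figures in the openai-whisper README. `pick_model` and `transcribe_local` are hypothetical helper names, and the thresholds are rough guides rather than hard limits.

```python
from typing import Optional

# Approximate VRAM needs in GB, as listed in the openai-whisper README
MODEL_VRAM_GB = [("tiny", 1), ("base", 1), ("small", 2), ("medium", 5), ("large", 10)]


def pick_model(available_vram_gb: float) -> str:
    """Return the largest model whose approximate VRAM need fits."""
    chosen = "tiny"  # fall back to the smallest model
    for name, need in MODEL_VRAM_GB:
        if need <= available_vram_gb:
            chosen = name
    return chosen


def transcribe_local(path: str, available_vram_gb: float,
                     language: Optional[str] = None) -> str:
    """Transcribe a file with a locally loaded Whisper model."""
    import whisper  # imported lazily so pick_model works without the package

    model = whisper.load_model(pick_model(available_vram_gb))
    # language=None lets Whisper detect the language itself
    result = model.transcribe(path, language=language)
    return result["text"]
```

For example, `transcribe_local("audio.mp3", available_vram_gb=4, language="kn")` would load the small model and treat the audio as Kannada.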

✅ Performance and Observations

Whisper is very strong with English content. It handled different accents and background noise well. I was impressed by its multilingual transcription and translation features—especially for a general-purpose model.

Compared to Sarvam, it lacked nuance in regional Indian languages but made up for it with consistent overall quality and availability of open-source tools.
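To make a comparison like this repeatable, you can score each tool's output against a hand-checked reference transcript using word error rate (WER). This is a minimal sketch, not the method behind my observations above; it splits on whitespace only and does no text normalization, which matters for Indic scripts and code-mixed text.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance divided by reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the ref words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or match
            )
        prev = curr
    return prev[-1] / len(ref)


print(wer("a b c d", "a x c"))  # 0.5 (one substitution, one deletion)
```

Run it on the same audio clips for both services and compare the averages; lower is better.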

🤝 Comparison Table

| Feature | Sarvam AI | OpenAI Whisper |
|---|---|---|
| Focus | Indian languages and dialects, especially Kannada | Multilingual, general-purpose |
| Accuracy | High for Indic languages, particularly Kannada | Excellent across many languages |
| Integration | API-based, simple setup | API or local open-source |
| Cost | Varies (depends on provider) | Pay-per-use (API); free to run locally apart from compute |
| Strengths | Regional accuracy, noise resilience on Indian audio, Kannada support | Global accuracy, translation, open-source |
| Weaknesses | Limited non-Indian language support, struggles with Hinglish/Kanglish | Occasionally struggles with Indian dialects |

🧠 Final Thoughts

If you're working with Indian regional languages, especially Kannada, Sarvam AI is incredibly effective and well-tuned for the job. It captures linguistic subtleties that global models might miss.

On the other hand, OpenAI Whisper is my go-to for multilingual or English-heavy workflows. Its open-source nature gives it an edge in flexibility and customization.

💡 TL;DR

- Choose Sarvam AI for: Kannada, Hindi, Tamil, Telugu, and other Indic use cases.

- Choose OpenAI Whisper for: English, multilingual projects, open-source control, or translation tasks.
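If you want to bake this rule of thumb into a pipeline, it can be as simple as a lookup. The sketch below is just my TL;DR written as code: `choose_engine` and the language set are hypothetical, and the returned strings are labels for you to map to your own client code.

```python
# Language codes for the Indic languages named in the TL;DR
SARVAM_LANGS = {"kn", "hi", "ta", "te"}


def choose_engine(language: str, need_translation: bool = False,
                  need_offline: bool = False) -> str:
    """Return "sarvam" or "whisper" following the TL;DR above."""
    # Translation and offline/open-source control both point to Whisper
    if need_translation or need_offline:
        return "whisper"
    return "sarvam" if language.lower() in SARVAM_LANGS else "whisper"


print(choose_engine("kn"))  # sarvam
print(choose_engine("en"))  # whisper
```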

Both are great tools, but they shine in different places. I'm glad I had the chance to explore both—and I'm excited to keep building more intelligent voice-based systems going forward.

Got feedback? Ping me on Twitter 🚀